Customers across many industries face the same challenges: increasing business agility, staying prepared for constantly changing markets and achieving business goals within budget.

They also need to reduce the burden on internal teams by automating processes and keeping them consistent across diverse, distributed environments, which reduces team overhead, shortens time-to-market, raises productivity and improves security posture.

Because OpenShift Pipelines and OpenShift GitOps are already included in Red Hat OpenShift offerings, customers can achieve consistency in their continuous integration/continuous deployment (CI/CD) processes, automating everything from source code to production across different cloud service providers (CSPs) and hybrid environments. This helps customers prepare for a multicloud strategy, shifting workloads to the cloud as business needs evolve.

Customers can focus on their business goals by using Microsoft Azure Red Hat OpenShift, Red Hat OpenShift Service on AWS and Red Hat OpenShift Dedicated on Google Cloud as their application platform.

This lets internal teams add value instead of managing day-to-day infrastructure and tools, giving them more room to focus on long-term innovation.

This blog series also includes:

- Migrating to OpenShift Pipelines and integrating continuous deployment

- A guide to integrating Red Hat OpenShift GitOps with Microsoft Azure DevOps

Examples in these posts use the following technologies:

- Azure Red Hat OpenShift, Red Hat OpenShift Service on AWS or Red Hat OpenShift Dedicated 4.12 or later installed

- OpenShift Pipelines 1.12 installed

- OpenShift GitOps 1.10 installed

- Quarkus application source code

- Azure Container Registry as an image repository

- An Azure DevOps repository as the source repository

Start the journey

This is the first in a series of three articles that show how to integrate an existing Azure DevOps CI pipeline into a wider scenario that might include a managed OpenShift cloud service, such as Azure Red Hat OpenShift or Red Hat OpenShift Service on AWS, pipelining with OpenShift Pipelines, and delivery with OpenShift GitOps.

This article covers building a Quarkus application with an Azure DevOps pipeline and deploying it on an Azure Red Hat OpenShift cluster.

The following prerequisites and assumptions are made in these demos:

- Your workstation runs a Fedora Linux distribution

- An Azure DevOps organization and project

- An Azure Container Registry (ACR) in the same Azure subscription used with Azure DevOps

- A Docker Registry service connection between the ACR and the Azure DevOps project, created through the subscription (select the "Service Principal" authentication type from the drop-down menu)

Get to the source

This demonstration uses Quarkus to build the application. Follow Step 1 of the Quarkus Getting Started guide to install the necessary binaries on your laptop or workstation.

Once you install Quarkus, run the following command to create your app:

```bash
quarkus create app com.devops:azure:1.0 --extension='resteasy-reactive-qute'
```

This creates a directory called azure in your current working directory, structured as follows:

```text
/home/mplacidi/azure
├── src
│   └── main
│       ├── docker
│       │   ├── Dockerfile.jvm
│       │   ├── Dockerfile.legacy-jar
│       │   ├── Dockerfile.native
│       │   └── Dockerfile.native-micro
│       ├── java
│       │   └── com
│       │       └── devops
│       │           └── SomePage.java
│       └── resources
│           ├── META-INF
│           │   └── resources
│           │       └── index.html
│           ├── templates
│           │   └── page.qute.html
│           └── application.properties
├── mvnw
├── mvnw.cmd
├── pom.xml
└── README.md
```

You can test your application by running the following command in the ./azure directory:

```bash
quarkus dev
```

This command launches the application in the foreground. Open a browser and visit http://localhost:8080 to check it out.
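If you prefer the terminal to a browser, a quick smoke test can confirm that the dev-mode application answers. This is a minimal sketch, assuming `curl` is installed and `quarkus dev` is already running in another terminal on the default port 8080; the `smoke_test` helper and the `BASE_URL` variable are hypothetical names used only for illustration.

```bash
#!/usr/bin/env bash
# Minimal smoke test for the Quarkus dev-mode application (sketch).
# Assumes `quarkus dev` is running in another terminal on the default port 8080.
set -euo pipefail

BASE_URL="${BASE_URL:-http://localhost:8080}"   # hypothetical override variable

# smoke_test (hypothetical helper) succeeds only if the URL answers without an HTTP error.
smoke_test() {
  local url="$1"
  # -f: fail on 4xx/5xx, -sS: quiet but still report errors, -o /dev/null: discard the body
  curl -fsS -o /dev/null --max-time 5 "$url"
}

if smoke_test "${BASE_URL}/"; then
  echo "OK: ${BASE_URL}/ is reachable"
else
  echo "FAIL: ${BASE_URL}/ did not respond successfully" >&2
fi
```

The root path serves the generated `index.html`, so it is a reasonable first check before looking at individual endpoints.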

Now that you've verified your code, push it to a repository in your Azure DevOps organization.
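One way to do this from the parent of the `./azure` directory is sketched below. It assumes an empty repository already exists in Azure Repos and that Git can authenticate to it (for example, with a personal access token or Git Credential Manager). `ORG`, `PROJECT` and `REPO` are hypothetical placeholders, and the branch is named `master` to match the trigger of the pipeline used in this article.

```bash
#!/usr/bin/env bash
# Sketch: push the generated Quarkus project to an Azure Repos Git repository.
# ORG, PROJECT and REPO are hypothetical placeholders; override them via environment variables.
set -euo pipefail

ORG="${ORG:-my-org}"
PROJECT="${PROJECT:-my-project}"
REPO="${REPO:-azure}"

# Azure Repos remote URLs have the form https://dev.azure.com/{organization}/{project}/_git/{repository}
azure_repo_url() {
  printf 'https://dev.azure.com/%s/%s/_git/%s\n' "$1" "$2" "$3"
}

push_project() {
  local dir="$1" remote
  remote="$(azure_repo_url "$ORG" "$PROJECT" "$REPO")"
  git -C "$dir" init -b master          # branch name matches the pipeline trigger
  git -C "$dir" add .
  git -C "$dir" commit -m "Initial Quarkus project"
  git -C "$dir" remote add origin "$remote"
  git -C "$dir" push -u origin master
}

# Only push when asked explicitly; otherwise just show the target remote.
if [[ "${1:-}" == "--push" ]]; then
  push_project ./azure
else
  echo "Target remote: $(azure_repo_url "$ORG" "$PROJECT" "$REPO") (run with --push to push ./azure)"
fi
```

For example, `ORG=contoso PROJECT=demo ./push.sh --push` would push to `https://dev.azure.com/contoso/demo/_git/azure` (the organization, project and file names here are made up).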

Push it to the cloud

With all components in place, move on to the pipeline build:

```yaml
# Run the pipeline on every push to master
trigger:
- master

pool:
  vmImage: ubuntu-latest

steps:
# Build the Quarkus application as a native executable
- task: Maven@3
  inputs:
    mavenPomFile: 'pom.xml'
    mavenOptions: '-Xmx4096m'
    javaHomeOption: 'JDKVersion'
    jdkVersionOption: '1.17'
    jdkArchitectureOption: 'x64'
    publishJUnitResults: false
    testResultsFiles: '**/surefire-reports/TEST-*.xml'
    goals: 'package'
    options: '-Dnative'
# Copy the build output to the staging directory used as the Docker build context
- task: CopyFiles@2
  inputs:
    targetFolder: '$(Build.ArtifactStagingDirectory)'
# Build the native container image and push it to the registry with two tags
- task: Docker@2
  inputs:
    containerRegistry: 'aromplacidi'   # name of the Docker Registry service connection
    repository: 'quarkus/azure'
    command: 'buildAndPush'
    Dockerfile: '**/Dockerfile.native'
    buildContext: '$(Build.ArtifactStagingDirectory)'
    tags: |
      latest
      $(Build.BuildId)
```

The tasks in detail are:

Maven@3: Select the default Maven task from the task assistant, then set the jdkVersionOption field to the Java version used to create the Quarkus app (in my case Java 17, which the task expresses as 1.17). Add the -Dnative option so that Maven produces a native executable.

CopyFiles@2: This step is necessary when using native build mode. It copies the output of the Maven@3 task (with no source folder or contents specified, the whole sources directory, including target/) into the staging directory, which the Docker@2 step then uses as its build context.

Docker@2: This step builds the image and pushes it to a container registry. I configured a service connection so that the pipeline can use resources from the subscription itself, such as the ACR, without having to enter credentials.

I added the buildContext field with the same value as the CopyFiles@2 targetFolder, and set the tags to both the Build.BuildId variable (the default) and latest, so the most recent build is always pushed to the registry as latest.
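To reproduce roughly what these steps do from a workstation, for example while debugging the Dockerfile, the sketch below builds the native executable, builds the image from `src/main/docker/Dockerfile.native` and pushes it with the same two tags. It is an approximation, not what Docker@2 runs internally. It assumes Docker is installed, that you are already logged in to the registry (for example with `az acr login --name <registry>`), and that a native build works locally; `REGISTRY`, `BUILD_ID` and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Rough local approximation of the pipeline's Maven@3 + Docker@2 (buildAndPush) steps (sketch).
# REGISTRY, REPOSITORY and BUILD_ID are hypothetical placeholders.
set -euo pipefail

REGISTRY="${REGISTRY:-myregistry.azurecr.io}"   # ACR login server (<name>.azurecr.io)
REPOSITORY="${REPOSITORY:-quarkus/azure}"       # same value as the Docker@2 'repository' input
BUILD_ID="${BUILD_ID:-local}"                   # stands in for $(Build.BuildId)

# Print one full image reference per tag, like the pipeline's 'tags' input.
image_refs() {
  local registry="$1" repository="$2" build_id="$3"
  printf '%s/%s:%s\n' "$registry" "$repository" latest "$registry" "$repository" "$build_id"
}

build_and_push() {
  # Native build; add -Dquarkus.native.container-build=true if GraalVM/Mandrel is not installed.
  ./mvnw package -Dnative
  local tag_args=() ref
  while read -r ref; do
    tag_args+=(-t "$ref")
  done < <(image_refs "$REGISTRY" "$REPOSITORY" "$BUILD_ID")
  # Build once with both tags, using the project root as the build context.
  docker build -f src/main/docker/Dockerfile.native "${tag_args[@]}" .
  while read -r ref; do
    docker push "$ref"
  done < <(image_refs "$REGISTRY" "$REPOSITORY" "$BUILD_ID")
}

# Without --run, only print the references that would be pushed.
if [[ "${1:-}" == "--run" ]]; then
  build_and_push
else
  image_refs "$REGISTRY" "$REPOSITORY" "$BUILD_ID"
fi
```

Building once with two `-t` flags mirrors the intent of the pipeline: `latest` and the build ID point to the same image digest.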

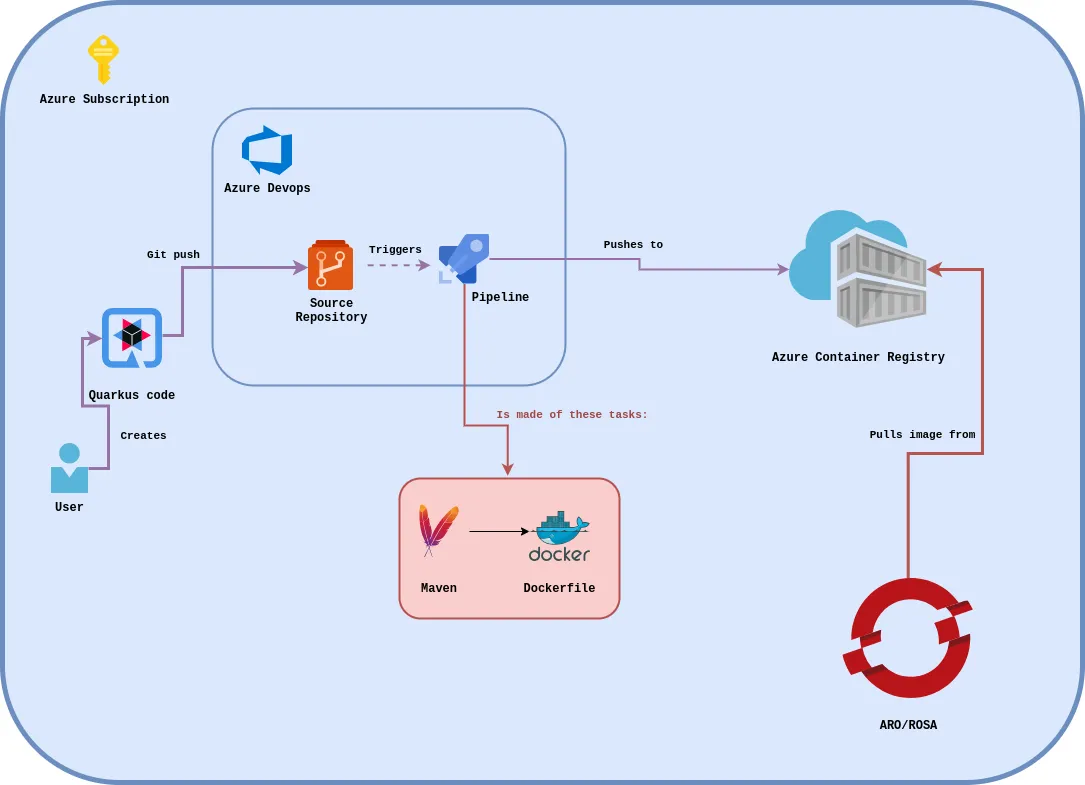

The following is a graphical representation of the entire workflow:

The results are in

Once the pipeline runs, the built image is in the configured destination registry, where a managed OpenShift cluster can consume it.
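To confirm that the pipeline pushed both tags, you can list them with the Azure CLI. A minimal sketch, assuming you are logged in with `az login` and that `ACR_NAME` (a hypothetical placeholder) holds the registry name without the `.azurecr.io` suffix:

```bash
#!/usr/bin/env bash
# Sketch: list the tags the pipeline pushed to ACR for the quarkus/azure repository.
# ACR_NAME is a hypothetical placeholder for the registry name (without .azurecr.io).
set -euo pipefail

ACR_NAME="${ACR_NAME:-myregistry}"
REPOSITORY="${REPOSITORY:-quarkus/azure}"   # same value as the Docker@2 'repository' input

list_tags() {
  # Newest first: each run adds its Build.BuildId tag, and 'latest' moves to the newest image.
  az acr repository show-tags --name "$1" --repository "$2" --orderby time_desc --output table
}

if [[ "${1:-}" == "--run" ]]; then
  list_tags "$ACR_NAME" "$REPOSITORY"
else
  echo "Run with --run to list the tags of ${ACR_NAME}.azurecr.io/${REPOSITORY}"
fi
```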

If you push the image to an external registry and want automatic rolling updates of your application, you'll need to import the image into an image stream with the --scheduled parameter, so that OpenShift checks it for upstream changes every 15 minutes (the default scheduled import interval):

```bash
oc import-image ${image_name} --from ${container_registry}/${container_repository}/${container_image}:${image_tag} --confirm --scheduled
```

The following is the command I used for this blog (note that this registry has since been decommissioned):

```bash
oc import-image azure --from aromplacidi.azurecr.io/quarkus/azure:latest --confirm --scheduled
```

Note: If the registry is private (that is, it requires credentials), you must configure a pull secret with the proper options:

```bash
oc create secret docker-registry ${name_of_the_secret} \
  --docker-server=${container_registry_server} \
  --docker-username=${username} \
  --docker-password=${token_or_password} \
  --docker-email=unused \
  -n ${project}
```

Next, link it to the default service account:

```bash
oc secrets link default ${name_of_the_secret} --for=pull -n ${project}
```

If you don't import the image with --scheduled, you'll have to import it into the image stream again to fire any imageChange triggers. Another option is to trigger the rollout manually if your Deployment, DeploymentConfig or other workload references the latest tag of the external image directly.
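The OpenShift-side steps above can be combined into one script. This is a sketch under several assumptions: you are logged in with `oc login`, the target project already exists, and the application runs as a Deployment named after the image stream (`azure`) whose container has the same name. `PROJECT`, `IMAGE`, the secret name `acr-pull-secret`, the `ACR_USERNAME`/`ACR_PASSWORD` variables and the `--run`/`--private` flags are hypothetical. `oc set triggers` is one way to make the Deployment roll out when the image stream tag changes.

```bash
#!/usr/bin/env bash
# Sketch: import the pipeline-built image into OpenShift and roll out updates automatically.
# PROJECT, APP, IMAGE and SECRET are hypothetical placeholders.
set -euo pipefail

PROJECT="${PROJECT:-quarkus-demo}"
APP="${APP:-azure}"                                          # image stream, Deployment and container name
IMAGE="${IMAGE:-myregistry.azurecr.io/quarkus/azure:latest}"
SECRET="${SECRET:-acr-pull-secret}"

# Private registries only: store the credentials and let the default service account pull with them.
configure_pull_secret() {
  local server="${IMAGE%%/*}"   # registry host, e.g. myregistry.azurecr.io
  oc create secret docker-registry "$SECRET" \
    --docker-server="$server" \
    --docker-username="${ACR_USERNAME:?set ACR_USERNAME}" \
    --docker-password="${ACR_PASSWORD:?set ACR_PASSWORD}" \
    --docker-email=unused \
    -n "$PROJECT"
  oc secrets link default "$SECRET" --for=pull -n "$PROJECT"
}

# Import the external image into the image stream <APP>:latest and re-check it periodically.
import_image() {
  oc import-image "$APP" --from "$IMAGE" --confirm --scheduled -n "$PROJECT"
}

# Roll out the Deployment whenever <APP>:latest points to a new image.
add_image_trigger() {
  oc set triggers "deployment/$APP" --from-image="$APP:latest" -c "$APP" -n "$PROJECT"
}

main() {
  if [[ "${1:-}" == "--private" ]]; then
    configure_pull_secret   # create the secret before importing from a private registry
  fi
  import_image
  add_image_trigger
}

# Usage: ./import.sh --run [--private]; without --run nothing is executed.
if [[ "${1:-}" == "--run" ]]; then
  shift
  main "$@"
fi
```

If you skip the trigger, `oc rollout restart deployment/azure` is the manual alternative.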

What's next?

As mentioned above, this is the first of three articles guiding you through CI/CD, DevOps and GitOps scenarios. The next article, Migrating to OpenShift Pipelines and integrating continuous deployment, deals with pipelining and triggers.

About the authors

Angelo has been working in IT since 2008, mostly on the infrastructure side, and has held many roles, including System Administrator, Application Operations Engineer, Support Engineer and Technical Account Manager.

Since joining Red Hat in 2019, Angelo has held several roles, all involving OpenShift. Now, as a Cloud Success Architect, he helps customers adopt, and accelerate their adoption of, Red Hat cloud service offerings such as Microsoft Azure Red Hat OpenShift, Red Hat OpenShift Service on AWS and Red Hat OpenShift Dedicated.

Gianfranco has worked in the IT industry for over 20 years in many roles, including system engineer, technical project manager, entrepreneur and cloud presales engineer, before joining the EMEA CSA team in 2022. He is interested in the continued exploration of cloud computing and its wide range of services, and his goal is to help customers succeed with their managed Red Hat OpenShift solutions in the cloud. A proud husband and father, he loves cooking, traveling and playing sports when possible (e.g., mountain biking, swimming, scuba diving).

Marco is a Cloud Success Architect, delivering production pilots and best practices to customers of managed cloud services (ROSA and ARO in particular). He has been at Red Hat since 2022, having previously worked as an IT Ops Cloud Team Lead and then as a Site Manager for various customers in the Italian Public Administration sector.
