In Red Hat OpenShift we have always tried to provide a great observability experience out of the box through first-class open source projects like Prometheus, Vector, Jaeger, Grafana Tempo and others. We have now added support for OpenTelemetry to the platform, which enables customers to use the OpenTelemetry collector, auto-instrumentation injection and the OpenTelemetry protocol (OTLP).

Supporting OTLP is very important for system interoperability. Most software-as-a-service (SaaS) observability vendors support OTLP as an ingestion protocol. Another facet of OpenTelemetry is decoupling data collection from storage. This architecture helps avoid vendor lock-in, and provides unified cross-signal capabilities for data collection, processing and forwarding to the observability backend.

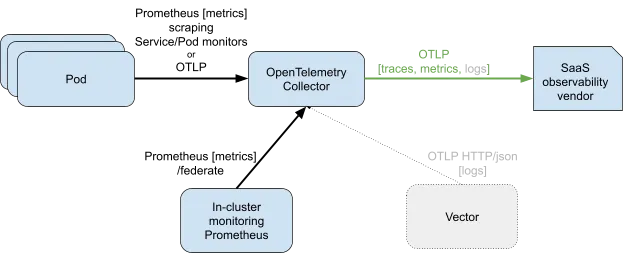

In this article we explore how the OpenTelemetry protocol can be used on OpenShift to ingest in-cluster data (workloads instrumented with OpenTelemetry) and how telemetry data can be exported from OpenShift via OTLP.

In-cluster OTLP ingestion

On OpenShift, the installation of the Red Hat build of OpenTelemetry operator allows you to ingest OTLP traces, metrics and logs. From the collector, the data can be sent to the in-cluster telemetry storage (Tempo for traces and Prometheus for metrics) or any external system that supports OTLP ingestion (see the next section for more details).

Observability needs are constantly evolving and unified configuration decreases maintenance burden and provides a stronger security posture. Placing an OpenTelemetry collector in front of the telemetry backend clearly separates data collection from storage. This architecture enables users to apply unified filtering and routing configuration for all signals, and to easily maintain and change the configuration over time. The routing enables users to better manage cost and decide which data is stored in cheaper in-cluster storage or exported to SaaS vendors.
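
As a rough illustration of per-signal routing, the collector configuration fragment below fans traces out to both an in-cluster store and an external vendor, while metrics go to the vendor only. It is a minimal sketch, not a recommended setup: the exporter names `otlp/tempo` and `otlp/vendor` are arbitrary labels, the Tempo endpoint is borrowed from the configuration later in this article, and the vendor endpoint is a placeholder.

```yaml
receivers:
  otlp:
    protocols:
      grpc:
      http:

exporters:
  # In-cluster trace storage (endpoint taken from the Tempo example in this article)
  otlp/tempo:
    endpoint: "tempo-simplest-distributor:4317"
    tls:
      insecure: true
  # External SaaS backend (placeholder endpoint, replace with your vendor's)
  otlp/vendor:
    endpoint: https://observability-vendor.com:4317

service:
  pipelines:
    # Traces are stored locally and also forwarded to the vendor
    traces:
      receivers: [otlp]
      exporters: [otlp/tempo, otlp/vendor]
    # Metrics are forwarded to the vendor only
    metrics:
      receivers: [otlp]
      exporters: [otlp/vendor]
```

Because the routing lives in the `service.pipelines` section, changing where a signal goes is a collector configuration change and does not require touching the instrumented workloads.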

Configuration

The following OpenTelemetry collector configuration enables:

- the OTLP receiver for ingesting OTLP messages over gRPC or HTTP

- the OTLP exporter for sending traces to Tempo

- the Prometheus exporter for exporting metrics to the user-workload monitoring stack

    apiVersion: opentelemetry.io/v1alpha1
    kind: OpenTelemetryCollector
    metadata:
      name: otel
      namespace: observability
    spec:
      mode: deployment
      observability:
        metrics:
          enableMetrics: true
      config: |
        receivers:
          otlp:
            protocols:
              grpc:
              http:
        exporters:
          otlp/tempo:
            endpoint: "tempo-simplest-distributor:4317"
            tls:
              insecure: true
          prometheus:
            endpoint: 0.0.0.0:8889
            resource_to_telemetry_conversion:
              enabled: true # by default resource attributes are dropped
        service:
          pipelines:
            traces:
              receivers: [otlp]
              exporters: [otlp/tempo]
            metrics:
              receivers: [otlp]
              exporters: [prometheus]

Traces

Traces can be forwarded to an in-cluster Tempo instance via OTLP.
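
Before traces can reach Tempo, workloads have to send them to the collector. The sketch below shows one way to point an instrumented workload at the collector using the standard OpenTelemetry SDK environment variables. The Deployment name, namespace and image are hypothetical, and the Service name `otel-collector` assumes the operator's usual `<name>-collector` naming for a collector CR called `otel` in the `observability` namespace.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend              # hypothetical workload
  namespace: my-app           # hypothetical namespace
spec:
  replicas: 1
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: frontend
          image: quay.io/example/frontend:latest   # placeholder image
          env:
            # Logical service name attached to the emitted telemetry
            - name: OTEL_SERVICE_NAME
              value: frontend
            # OTLP gRPC endpoint of the collector (assumed Service name, port 4317)
            - name: OTEL_EXPORTER_OTLP_ENDPOINT
              value: http://otel-collector.observability.svc.cluster.local:4317
            - name: OTEL_EXPORTER_OTLP_PROTOCOL
              value: grpc
```

This only works if the application is instrumented with an OpenTelemetry SDK that reads these variables; for OTLP over HTTP, the conventional port is 4318 instead of 4317.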

Metrics

Metrics from the collector can be sent to the OpenShift user-workload monitoring stack via the Prometheus exporter, which the monitoring stack scrapes. An alternative would be to use the Cluster Observability Operator and push the metrics via the experimental Prometheus OTLP HTTP API. However, this feature is not supported at the time of writing.

The `spec.observability.metrics.enableMetrics` field of the collector CR instructs the operator to create a service monitor for scraping the collector’s Prometheus exporter.
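
The scrape path described above relies on user-workload monitoring being enabled on the cluster, a step this article does not show. A minimal sketch of enabling it follows. It assumes cluster-admin rights and that no `cluster-monitoring-config` ConfigMap exists yet; if one does, merge the setting into it instead of overwriting it.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    # Turns on the user-workload monitoring stack
    enableUserWorkload: true
```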

Logs

At the moment Loki does not support ingesting logs via OTLP, but the upstream OpenTelemetry collector contrib distribution contains a Loki push exporter (see the RFE for adding the Loki exporter to the Red Hat collector distribution 3.2). The Loki exporter is not included in the Red Hat build of OpenTelemetry as of version 3.1, so OpenShift users who need it today can use the community build of the OpenTelemetry collector contrib distribution.

Out-cluster OTLP export

Exporting all telemetry signals is only partially supported. The metrics and logging stacks on OpenShift do not support OTLP sinks, but workaround solutions are available.

Configuration

The following configuration deploys an OpenTelemetry collector as a StatefulSet and enables the OTLP receiver, the Prometheus receiver and the Target Allocator (TA). The TA discovers workloads configured with Service and Pod monitors so that the collector can scrape their Prometheus metrics. The Prometheus receiver scrapes the federate endpoint of the in-cluster monitoring stack. Before applying the config, create the role-based access control (RBAC) needed by the collector’s Prometheus target allocator.

    # Give collectors RBAC to access metrics
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: otel-collector
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-monitoring-view
    subjects:
      - kind: ServiceAccount
        name: otel-collector
        namespace: observability
    ---
    # TLS for prometheus receiver
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: cabundle
      annotations:
        service.beta.openshift.io/inject-cabundle: "true"
    ---
    apiVersion: opentelemetry.io/v1alpha1
    kind: OpenTelemetryCollector
    metadata:
      name: otel
      namespace: observability
    spec:
      mode: statefulset
      targetAllocator:
        enabled: true
        prometheusCR:
          enabled: true
          serviceMonitorSelector:
            app: frontend
      volumeMounts:
        - name: cabundle-volume
          mountPath: /etc/pki/ca-trust/source/service-ca
          readOnly: true
      volumes:
        - name: cabundle-volume
          configMap:
            name: cabundle
      config: |
        exporters:
          otlp:
            endpoint: https://observability-vendor.com:4317
          prometheus:
            endpoint: 0.0.0.0:8989
            metric_expiration: 10m
        receivers:
          otlp:
            protocols:
              grpc:
              http:
          prometheus:
            config:
              scrape_configs:
                - job_name: 'federate'
                  scrape_interval: 15s
                  scheme: https
                  tls_config:
                    ca_file: /etc/pki/ca-trust/source/service-ca/service-ca.crt
                  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
                  honor_labels: false
                  params:
                    'match[]':
                      - '{__name__="cluster:usage:containers:sum"}'
                      - '{__name__=~"cluster:cpu_usage_cores:sum|cluster:memory_usage_bytes:sum"}'
                      - '{__name__=~"workload:cpu_usage_cores:sum|workload:memory_usage_bytes:sum"}'
                      - '{__name__=~"namespace_memory:kube_pod_container_resource_limits:sum|namespace_memory:kube_pod_container_resource_requests:sum|namespace_cpu:kube_pod_container_resource_limits:sum|namespace_cpu:kube_pod_container_resource_requests:sum"}'
                  metrics_path: '/federate'
                  metric_relabel_configs:
                    - action: labeldrop
                      regex: '(prometheus_replica|prometheus)'
                  static_configs:
                    - targets:
                      - "prometheus-k8s.openshift-monitoring.svc.cluster.local:9091"
        service:
          telemetry:
            metrics:
              address: ":8888"
          pipelines:
            traces:
              receivers: [otlp]
              exporters: [otlp]
            metrics:
              receivers: [otlp, prometheus]
              exporters: [otlp, prometheus]

The collector also enables the Prometheus exporter, which can be port-forwarded locally to inspect the scraped metrics, for example:

    # HELP cluster_cpu_usage_cores_sum
    # TYPE cluster_cpu_usage_cores_sum gauge
    cluster_cpu_usage_cores_sum{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 2.692632467806617
    # HELP cluster_memory_usage_bytes_sum
    # TYPE cluster_memory_usage_bytes_sum gauge
    cluster_memory_usage_bytes_sum{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 1.2121485312e+10
    # HELP cluster_usage_containers_sum
    # TYPE cluster_usage_containers_sum gauge
    cluster_usage_containers_sum{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 506
    # HELP scrape_duration_seconds Duration of the scrape
    # TYPE scrape_duration_seconds gauge
    scrape_duration_seconds{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 0.002200895
    # HELP scrape_samples_post_metric_relabeling The number of samples remaining after metric relabeling was applied
    # TYPE scrape_samples_post_metric_relabeling gauge
    scrape_samples_post_metric_relabeling{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 5
    # HELP scrape_samples_scraped The number of samples the target exposed
    # TYPE scrape_samples_scraped gauge
    scrape_samples_scraped{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 5
    # HELP scrape_series_added The approximate number of new series in this scrape
    # TYPE scrape_series_added gauge
    scrape_series_added{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 5
    # HELP target_info Target metadata
    # TYPE target_info gauge
    target_info{http_scheme="https",instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate",net_host_name="prometheus-k8s.openshift-monitoring.svc.cluster.local",net_host_port="9091"} 1
    # HELP up The scraping was successful
    # TYPE up gauge
    up{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 1
    # HELP workload_cpu_usage_cores_sum
    # TYPE workload_cpu_usage_cores_sum gauge
    workload_cpu_usage_cores_sum{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 0.005644136111111113
    # HELP workload_memory_usage_bytes_sum
    # TYPE workload_memory_usage_bytes_sum gauge
    workload_memory_usage_bytes_sum{instance="prometheus-k8s.openshift-monitoring.svc.cluster.local:9091",job="federate"} 9.8480128e+07

Metrics

The metrics from the in-cluster monitoring stack are scraped from the Prometheus federate endpoint.

Federation is a good fit for exporting aggregated metrics (see the OpenShift docs for federation limitations) to your vendor of choice while keeping fine-grained metrics in the local cluster, which avoids large vendor costs. Our configuration scrapes platform and workload CPU and memory metrics.

To overcome the federation limitations for user-workload metrics, the Target Allocator from the OpenTelemetry operator can be used to scrape Prometheus metrics directly.
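
For the Target Allocator to pick up a workload, a matching monitor resource has to exist. The sketch below is a hypothetical ServiceMonitor carrying the `app: frontend` label that the `serviceMonitorSelector` in the configuration above selects on. The namespace, the Service labels and the port name `metrics` are assumptions for illustration and must match your own workload.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: frontend
  namespace: my-app        # hypothetical namespace of the workload
  labels:
    app: frontend          # matched by targetAllocator.prometheusCR.serviceMonitorSelector
spec:
  selector:
    matchLabels:
      app: frontend        # labels on the Service exposing the metrics (assumed)
  endpoints:
    - port: metrics        # named port on that Service (assumed)
      interval: 30s
```

The selector on the collector CR matches labels on the ServiceMonitor object itself, while `spec.selector` inside the ServiceMonitor matches labels on the target Service.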

Logs

At the moment OpenShift logging (Vector) cannot export logs in the OTLP format that the collector could consume, because Vector lacks an OTLP sink. However, an alternative solution is to deploy the OpenTelemetry collector as a DaemonSet with the FileLog receiver. The receiver reads pod logs from the /var/log/pods/* directory (see example config).
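
A minimal sketch of such a DaemonSet collector is shown below. It is not the linked example config: the CR name `otel-logs`, the volume name and the glob pattern are assumptions, and the vendor endpoint is the same placeholder used earlier in this article. Reading host paths on OpenShift also requires the collector's service account to be allowed a suitable security context constraint, which is not shown here.

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: otel-logs            # hypothetical name
  namespace: observability
spec:
  mode: daemonset            # one collector pod per node
  volumes:
    - name: varlogpods
      hostPath:
        path: /var/log/pods  # node directory holding pod logs
  volumeMounts:
    - name: varlogpods
      mountPath: /var/log/pods
      readOnly: true
  config: |
    receivers:
      filelog:
        # <namespace>_<pod>_<uid>/<container>/<n>.log (assumed layout)
        include: [/var/log/pods/*/*/*.log]
        include_file_path: true
        start_at: end        # only read lines written after startup
    exporters:
      otlp:
        endpoint: https://observability-vendor.com:4317
    service:
      pipelines:
        logs:
          receivers: [filelog]
          exporters: [otlp]
```

In practice the receiver is usually combined with parsing operators that extract the timestamp, stream and Kubernetes metadata from each line; those are left out to keep the sketch short.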

Conclusion

In this article we have covered two use-cases. The first is collecting in-cluster OTLP traces, metrics and logs; the second is exporting all telemetry signals from the cluster via OTLP. We have described a solution for the first use-case for traces and metrics, but, at the moment, there is still an issue with sending logs to the log store (Loki). A future release of the Red Hat build of OpenTelemetry will provide the Loki exporter until OTLP ingestion is available in Loki.

The second use-case (exporting OTLP) is well supported for all signals, but there is a limitation for exporting all metrics from the in-cluster monitoring stack. The Red Hat build of OpenTelemetry allows users to export logs via OTLP when using the appropriate receivers, such as the FileLog receiver. As of today, this cannot be achieved with Vector, since the OTLP sink is not yet implemented.

Configuration manifest files can be found here.

Discover more about our Observability components and upcoming developments.

We value your feedback, which is crucial for enhancing our products. Share your questions and recommendations with us using the Red Hat OpenShift feedback form. You can also express your interest in the RFEs shared in this article.

About the author

Pavol Loffay is a principal software engineer at Red Hat working on open-source observability technology for modern cloud-native applications. He contributes to and maintains the Cloud Native Computing Foundation (CNCF) projects OpenTelemetry and Jaeger. In his free time, Pavol likes to hike, climb, and ski steep slopes in the Swiss Alps.
